Explainable AI

Project Introduction: Explainable AI research in visualization aims to visualize AI models and their decision processes, improving people's understanding of and trust in algorithms. Our group has published several works in this direction, including a visual explanation of BERT-based open-domain question answering models (TVCG 2023), a spatio-temporal visual analysis approach for interpretable object detection in autonomous driving (TVCG 2022), and an interactive visualization approach for feature selection in deep learning models (PacificVis 2022 poster).

Related Publications

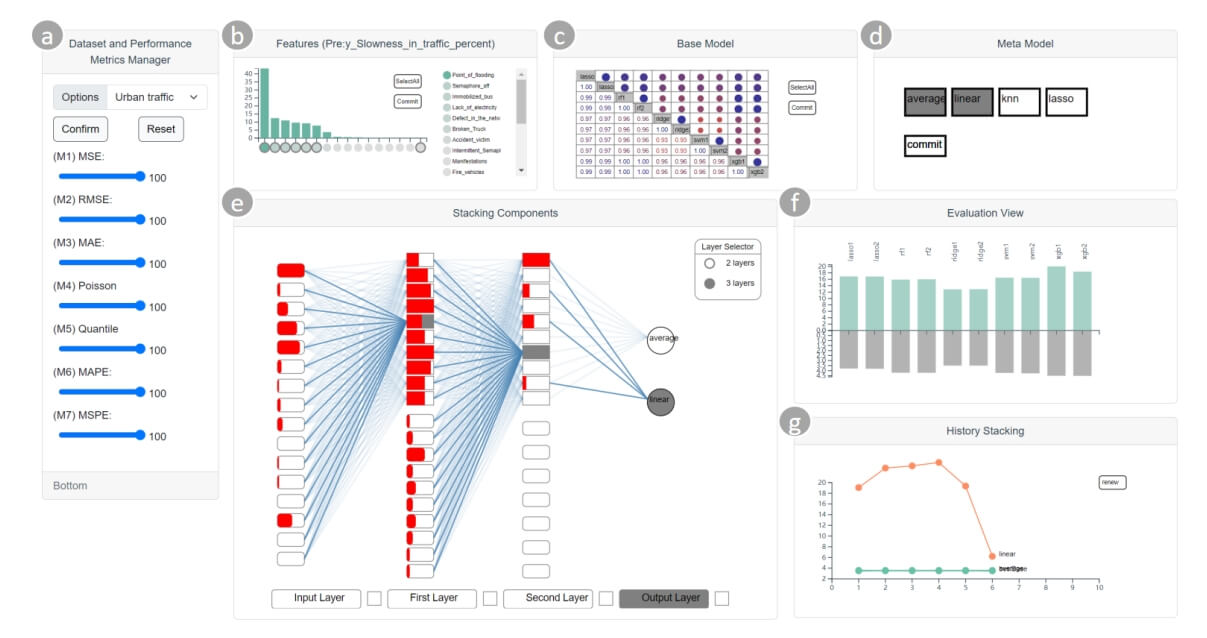

InterpretStack: Interpretable Exploration and Interactive Visualization Construction of Stacking Algorithm.

Yu Wang, Jing Lu, Le Liu, Junping Zhang, Siming Chen*.

VLDB Workshops 2024.

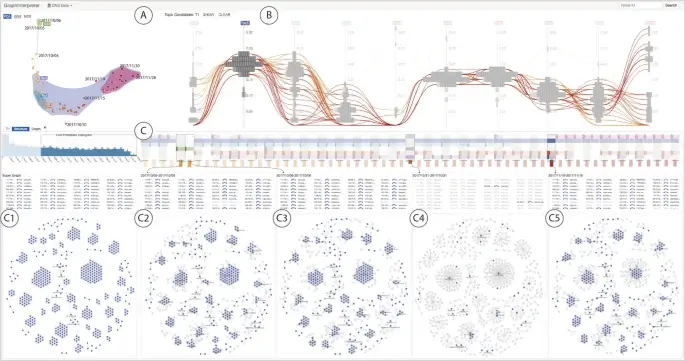

GraphInterpreter: a visual analytics approach for dynamic networks evolution exploration via topic models.

Lijing Lin, Jiacheng Yu, Fan Hong, Chufan Lai, Siming Chen, Xiaoru Yuan

Journal of Visualization, 2024.

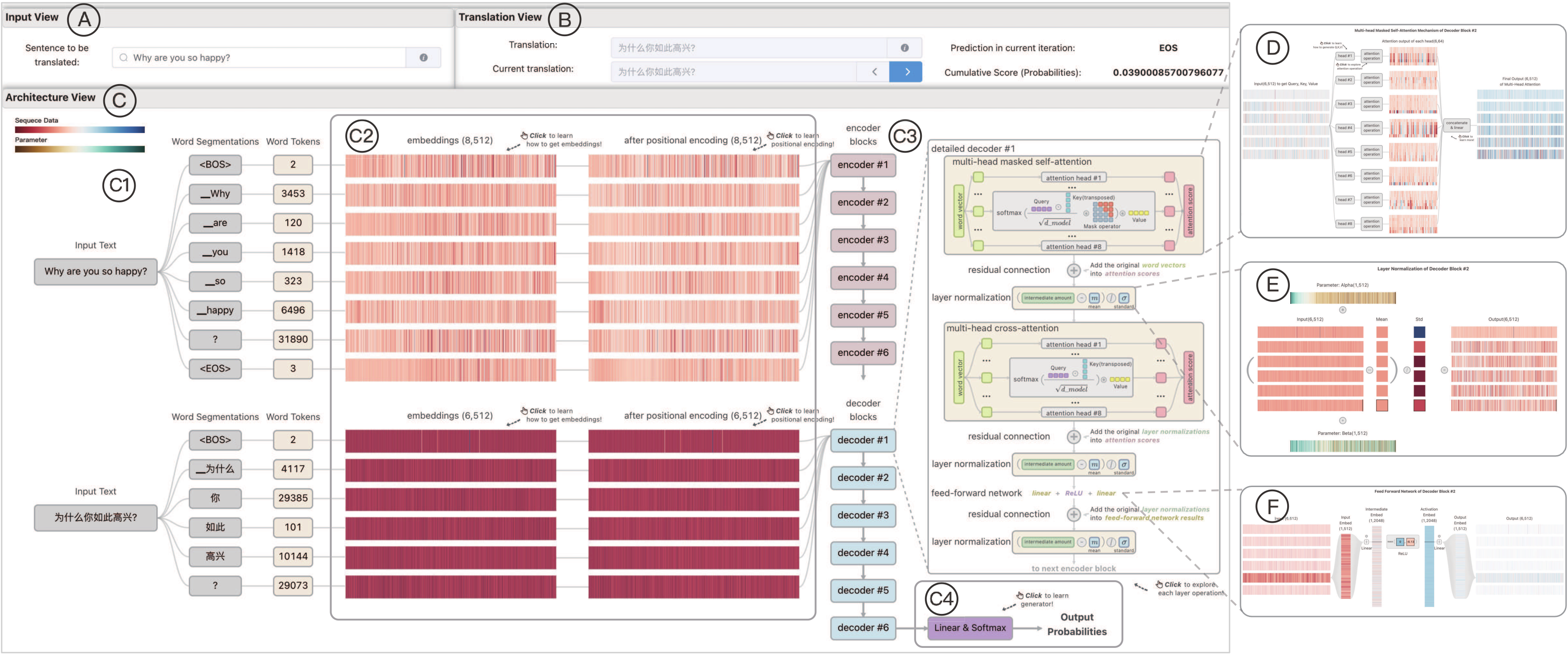

TransforLearn: Interactive Visual Tutorial for the Transformer Model.

Lin Gao, Zekai Shao, Ziqin Luo, Haibo Hu, Cagatay Turkay, Siming Chen*.

IEEE Transactions on Visualization and Computer Graphics (VIS'23), Accepted, 2024.

Interpreting High-Dimensional Projections With Capacity.

Yang Zhang, Jisheng Liu, Chufan Lai, Yuan Zhou, Siming Chen*.

IEEE Transactions on Visualization and Computer Graphics (TVCG), Accepted, 2023.

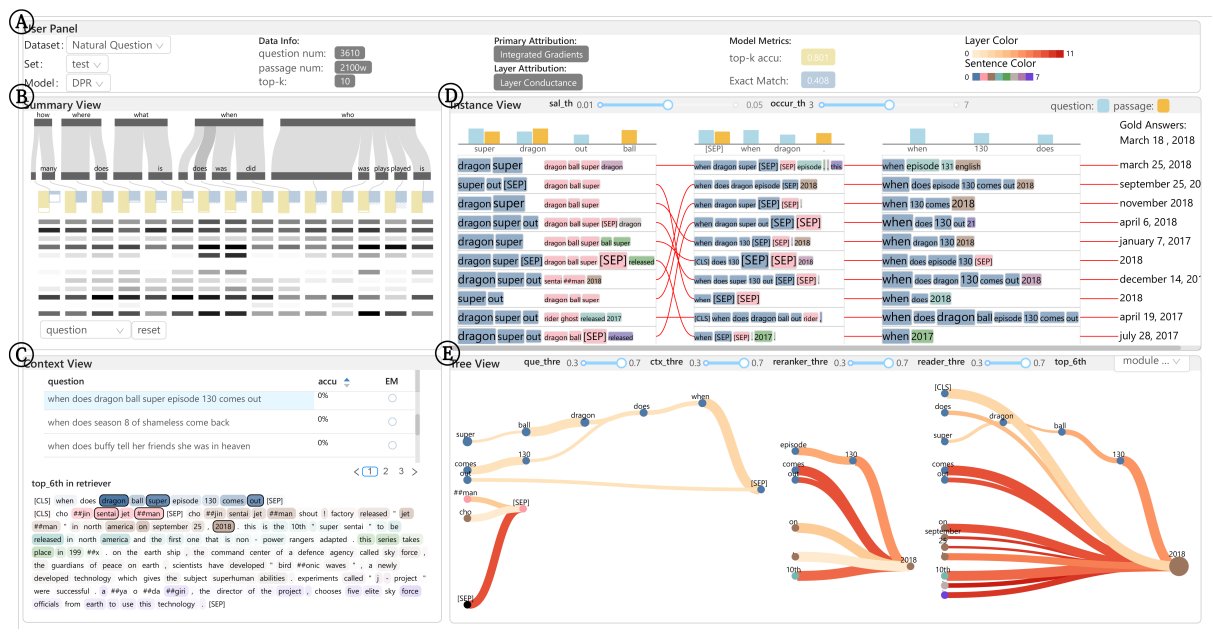

Visual Explanation for Open-domain Question Answering with BERT.

Zekai Shao, Shuran Sun, Yuheng Zhao, Siyuan Wang, Zhongyu Wei, Tao Gui, Cagatay Turkay, Siming Chen*.

IEEE Transactions on Visualization and Computer Graphics (TVCG), Accepted, 2023.

| Paper | pdf (14.0MB) | Video | mp4 (25.3MB)

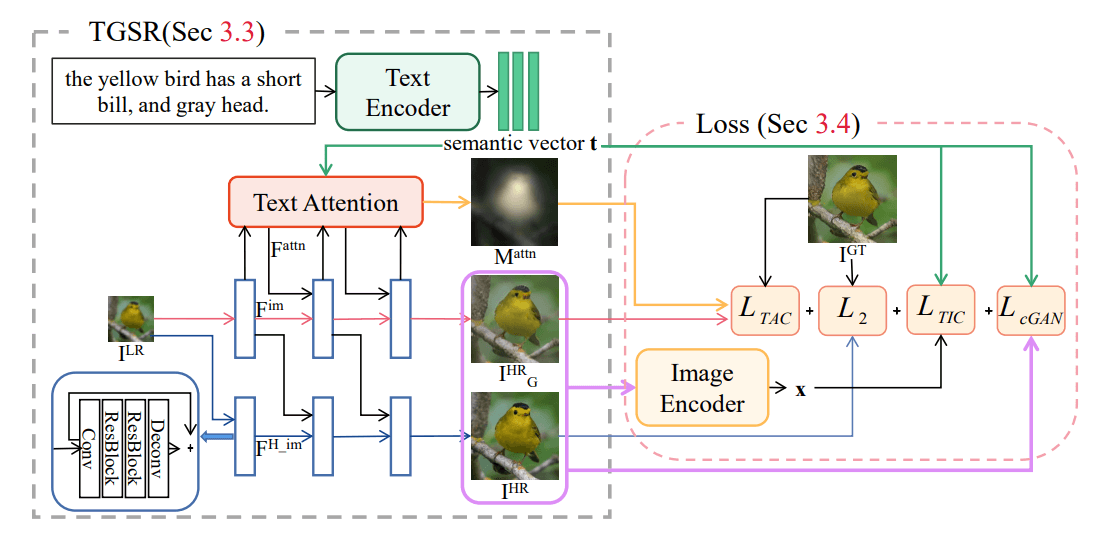

Rethinking Super-Resolution as Text-Guided Details Generation.

Chenxi Ma, Bo Yan, Qing Lin, Weimin Tan, Siming Chen.

ACM Multimedia 2022 (ACM MM'22), Accepted, 2022.

| Paper | pdf (4.0MB)

- © FDU-VIS All rights reserved. 2026